In the rapidly evolving world of AI-driven customer service, chatbots have become an indispensable tool for businesses aiming to enhance user engagement and streamline operations. However, the success of a chatbot hinges on its performance, which is why rigorous testing is essential. This guide covers strategies and best practices for chatbot testing, so that your chatbot, like Galadon, is not only functional but also delivers a seamless and effective user experience. From understanding testing fundamentals to leveraging analytics for continuous improvement, it walks through what you need to make sure your chatbot meets, and ideally exceeds, user expectations.

Chatbot testing is like giving your chatbot a check-up before it talks to real people. It's a way to make sure your chatbot can handle conversations without messing up. Think of it as training for your chatbot so it can be its best when chatting with users. Just like people practice before a big game, chatbots need practice too.

Remember, testing isn't just a one-time thing. It's important to keep checking your chatbot even after it's out in the world. This helps catch any new mistakes and keeps the chatbot sharp.

Before a chatbot steps into the world, it's got to pass some tests. Think of it like a robot's final exam. There are three main types of tests: general, domain-specific, and limit tests. These are the big checks to make sure your chatbot can handle what's thrown at it.

General Testing is all about the basics. Can your chatbot say hello and keep the chat going? If it can't, users might just say goodbye and bounce, which is bad news for your chatbot's report card.

Domain-Specific Testing gets into the nitty-gritty. It's where you make sure your chatbot knows its stuff: if it's selling shoes, it had better know a sneaker from a slipper.

Limit Testing is like seeing how much weight your chatbot can lift. It's about pushing boundaries with odd, off-topic, or extreme inputs and making sure it doesn't crack under pressure.
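
Part of limit testing can be automated. You throw awkward inputs at the bot and check that it always answers with something instead of crashing or going silent. Here's a minimal sketch of that idea. The `respond` function is a hypothetical toy bot standing in for your real chatbot, and the edge cases are only examples to start from.

```python
# Minimal limit-testing sketch. `respond` is a hypothetical toy bot;
# replace it with a call to your real chatbot.

FALLBACK = "Sorry, I didn't catch that. Could you rephrase?"

def respond(text: str) -> str:
    """Toy bot: answers greetings, falls back on everything else."""
    if text.strip().lower() in {"hi", "hello", "hey"}:
        return "Hello! How can I help you today?"
    return FALLBACK

# Inputs that push the bot off the "happy path".
EDGE_CASES = [
    "",                         # empty message
    "   ",                      # whitespace only
    "a" * 10_000,               # very long message
    "👟👟👟",                    # emoji only
    "helo",                     # typo
    "'; DROP TABLE users;--",   # injection-style text
    "HELLO",                    # unexpected casing
]

def run_limit_tests(bot, cases):
    """Return (input, problem) pairs; an empty list means every case passed."""
    failures = []
    for case in cases:
        try:
            reply = bot(case)
        except Exception as exc:  # the bot should never crash on user input
            failures.append((case, f"raised {type(exc).__name__}"))
            continue
        if not isinstance(reply, str) or not reply.strip():
            failures.append((case, "empty or non-text reply"))
    return failures

if __name__ == "__main__":
    problems = run_limit_tests(respond, EDGE_CASES)
    print(problems or "all limit tests passed")
```

A polite fallback counts as a pass here. The check only fails on crashes and empty replies, so you'd add content checks on top for inputs that deserve a real answer.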

Remember, these tests aren't just a one-time deal. After your chatbot goes live, you've got to keep testing to make sure it's still on its A-game. It's like a car; you don't just check the engine once. You've got to keep giving it tune-ups.

And hey, whether it's before launch or after, you've got options like automated tests that do the heavy lifting for you, and A/B testing to see what works best. It's all about keeping your chatbot sharp and ready for chat.

Agile development is a big deal when it comes to making chatbots better. It's not just about building the chatbot and saying 'done.' It's about making sure the chatbot can keep getting better over time. After the chatbot starts talking to people, it's super important to see what's working and what's not. This means always checking on how the chatbot is doing and being ready to make changes fast.

Remember, the real test for a chatbot starts when real people use it. That's when you see if it's really helping or if it needs a tune-up.

With agile, you're always ready to fix things and add cool new stuff to your chatbot. This way, it can handle all sorts of chats, even the ones you didn't think about when you first made it.

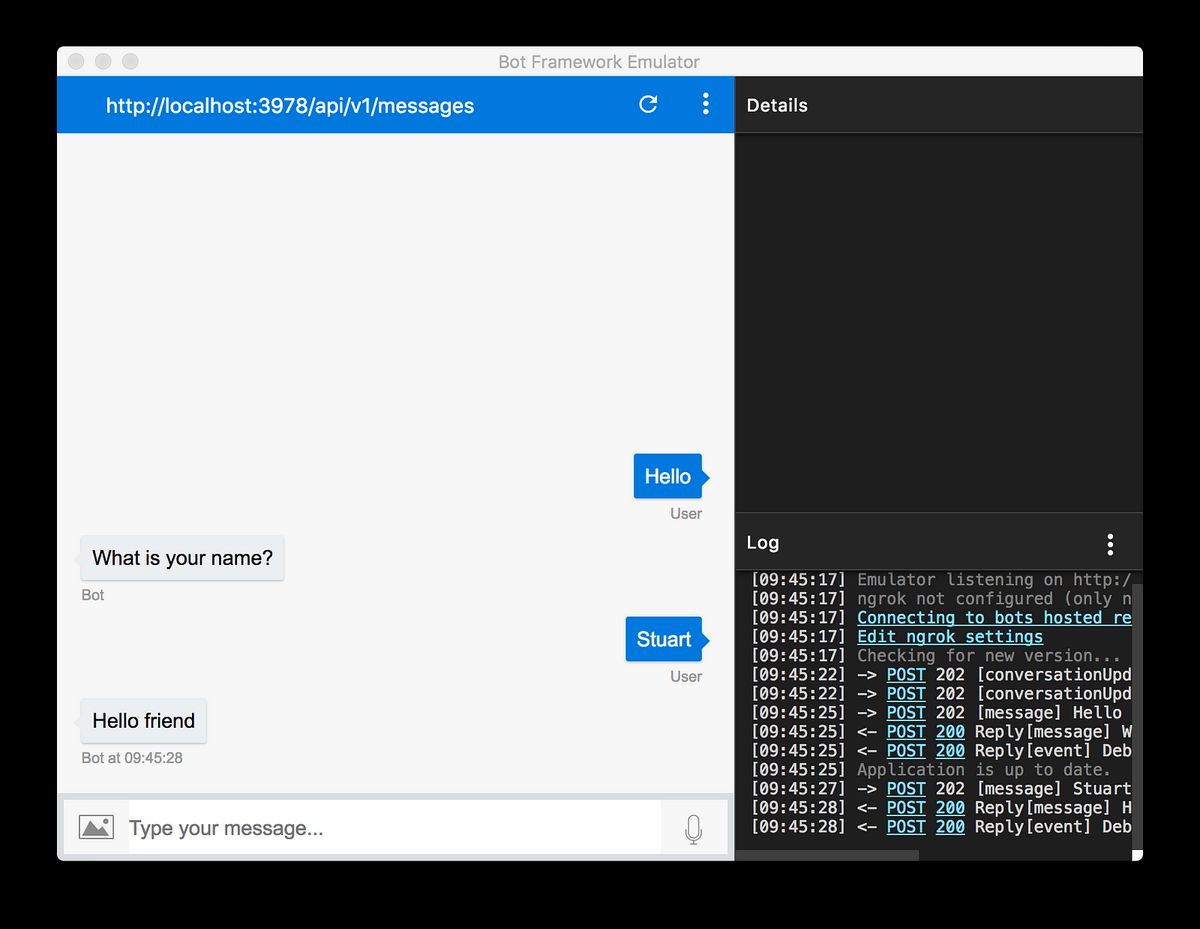

When we talk about making sure our chatbot works like a charm, we start with unit testing. This is where we check each part of the chatbot on its own. Imagine you're building a robot and you want to make sure each arm and leg works right before you put it all together. That's what unit testing is for chatbots.

Here's a simple way to think about it:

Remember, even the smartest chatbot needs to be tested bit by bit to ensure it's ready for real conversations.
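
Here's what a unit test might look like for one piece of a chatbot. This is a sketch that assumes a hypothetical keyword-based `classify_intent` function as the component under test. Real bots usually rely on an NLU model, but the idea of checking one part in isolation is the same. The `test_` functions follow pytest naming conventions, so `pytest` would pick them up, but they're plain functions you can also call directly.

```python
import re

# Hypothetical component under test: a tiny keyword-based intent classifier.
INTENT_KEYWORDS = {
    "greeting": {"hi", "hello", "hey"},
    "order_status": {"order", "tracking", "shipped"},
    "refund": {"refund", "return"},
}

def classify_intent(text: str) -> str:
    """Return the first intent whose keywords appear as whole words, else 'fallback'."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:
            return intent
    return "fallback"

# Unit tests: each checks the classifier alone, with no dialogue logic,
# backend, or UI involved.
def test_greeting():
    assert classify_intent("Hello there!") == "greeting"

def test_whole_words_only():
    # "shipped" contains "hi", but that must not count as a greeting.
    assert classify_intent("Has my order shipped?") == "order_status"

def test_unknown_text_falls_back():
    assert classify_intent("What's the weather like?") == "fallback"
```

The `test_whole_words_only` case shows why unit tests pay off. A naive substring check would misread "shipped" as a greeting, and this test catches that before the classifier is wired into anything else.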

After we test all the pieces, we put them together and see if the chatbot can handle a full chat. It's like making sure all the robot's parts work together so it can walk and talk without tripping over. And just like robots, chatbots need regular check-ups to stay in tip-top shape!

After unit testing individual chatbot components, it's time for integration testing. This is where we make sure all the parts of our chatbot work well together. Imagine you've got a bunch of puzzle pieces, and now you need to see if they fit to make the right picture. That's what integration testing is like for chatbots.

Here's what you might check during integration testing:

Remember, the goal is to catch any issues before real users start chatting with your bot. You want to find and fix problems so that talking to your chatbot feels smooth and helpful.
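
To make integration testing concrete, here's a sketch where three hypothetical parts are wired together and tested as one pipeline: intent detection, an order-lookup backend, and a dialogue manager. The in-memory `FakeOrderBackend` stands in for a real order API so the test runs anywhere. All the names are made up for illustration.

```python
import re

def detect_intent(text):
    """Component 1: very small intent detector (hypothetical)."""
    text = text.lower()
    if re.search(r"\border\b", text):
        return "order_status"
    if re.search(r"\b(hi|hello|hey)\b", text):
        return "greeting"
    return "fallback"

class FakeOrderBackend:
    """Component 2: in-memory stand-in for a real order-status API."""
    def __init__(self, orders):
        self.orders = orders

    def status(self, order_id):
        return self.orders.get(order_id)

class DialogueManager:
    """Component 3: decides what to say using the other two components."""
    def __init__(self, backend):
        self.backend = backend

    def handle(self, text):
        intent = detect_intent(text)
        if intent == "greeting":
            return "Hi! Ask me about your order."
        if intent == "order_status":
            match = re.search(r"\d+", text)
            if not match:
                return "Sure, what's your order number?"
            status = self.backend.status(match.group())
            if status is None:
                return f"I couldn't find order {match.group()}."
            return f"Order {match.group()} is {status}."
        return "Sorry, I can only help with orders."

def test_order_lookup_flows_through_all_components():
    bot = DialogueManager(FakeOrderBackend({"1234": "shipped"}))
    assert bot.handle("Where is order 1234?") == "Order 1234 is shipped."
    assert bot.handle("Where is order 9999?") == "I couldn't find order 9999."
    assert bot.handle("Where is my order?") == "Sure, what's your order number?"
```

Each assertion exercises the hand-offs between parts: intent detection feeds the dialogue manager, which pulls the order number out of the message and asks the backend.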

There are various tools for chatbot testing. A few worth evaluating for your business are Testsigma, Botium, Botpress, Qbox, and Chatbottest. It's like having a toolbox where each tool has its own special job for making your chatbot better.

User Acceptance Testing, or UAT, is a key step in making sure your chatbot is ready to chat with real people. It's like the final exam for your chatbot, where real users get to test it out and see if it meets their needs. UAT checks if the chatbot is easy to use, gives the right answers, and makes users happy with its help.

When you're doing UAT, think about these things:

Remember, the goal of UAT is to find any big problems before your chatbot starts chatting with everyone. It's your chance to make sure the chatbot does a great job and that people will like using it.

After you've checked all these things, you can be more confident that your chatbot is ready to go live. And if you find any issues, you can fix them before they become big headaches for your users.

When it comes to chatbot testing, there's a big decision to make: should you go for automated testing or stick with manual? Automated testing tools execute test cases by following a pre-written script to the letter, which makes them super consistent. Every run checks the chatbot against exactly the same steps and expected replies. But manual testing has its place too. It's all about the human touch, like using Amazon's Mechanical Turk to add some human smarts to the process.

Here's a quick look at the pros and cons of each method:

Remember, it's not about choosing one over the other. The best approach is to use both automated and manual testing to cover all your bases. Automated tests can handle the grunt work, while manual tests bring in the human perspective that's so important for chatbots.
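
Here's the kind of grunt work automated tests are good at: replaying scripted conversations and flagging the first reply that doesn't match. This is a minimal sketch using a hypothetical `ShoeBot`. Dedicated tools such as Botium have their own script formats, which this does not reproduce.

```python
class ShoeBot:
    """Hypothetical stateful bot: asks for a shoe size, then confirms it."""
    def __init__(self):
        self.awaiting_size = False

    def reply(self, text):
        if self.awaiting_size:
            if text.strip().isdigit():
                self.awaiting_size = False
                return f"Great, size {text.strip()} it is."
            return "Please send your size as a number."
        if "sneaker" in text.lower():
            self.awaiting_size = True
            return "What size do you wear?"
        return "I can help you find sneakers."

# Each script is a list of (user message, expected bot reply) turns.
SCRIPTS = {
    "happy_path": [
        ("I want sneakers", "What size do you wear?"),
        ("42", "Great, size 42 it is."),
    ],
    "bad_size_then_retry": [
        ("sneakers please", "What size do you wear?"),
        ("large", "Please send your size as a number."),
        ("9", "Great, size 9 it is."),
    ],
}

def replay(bot_factory, scripts):
    """Replay every script on a fresh bot; return {script name: first mismatch}."""
    failures = {}
    for name, turns in scripts.items():
        bot = bot_factory()  # fresh state for each conversation
        for turn, (user_msg, expected) in enumerate(turns, start=1):
            actual = bot.reply(user_msg)
            if actual != expected:
                failures[name] = (turn, expected, actual)
                break
    return failures

if __name__ == "__main__":
    print(replay(ShoeBot, SCRIPTS) or "all scripted conversations passed")
```

Scripts like these only cover what someone thought to write down. Manual testers are still the ones who find the conversations nobody scripted.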

A/B testing is like a science experiment for your chatbot. You take two versions of your chatbot and test them with real users to see which one does a better job. It's all about making your chatbot the best it can be. You might change how the chatbot talks or what it says, and then watch to see which version users like more.

Here's how you can start A/B testing your chatbot:

Remember, A/B testing isn't just a one-time thing. Keep testing and tweaking your chatbot to make it even better over time. And don't forget, sometimes the chatbot might get things wrong. That's okay! It's all part of the process to help your chatbot learn and grow.
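
Two building blocks of a chatbot A/B test fit in a few lines. The first splits users consistently between versions. The second checks whether the difference in a success metric, such as conversion rate, is bigger than chance. This sketch assumes a simple 50/50 split keyed on a user ID and uses a standard two-proportion z-test. The experiment name and the numbers in the usage example are made up.

```python
import hashlib
import math

def assign_variant(user_id: str, experiment: str = "greeting_test") -> str:
    """Deterministically bucket a user into 'A' or 'B' (roughly 50/50)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"

def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for B's success rate minus A's."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("no variation in outcomes; cannot test")
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

if __name__ == "__main__":
    # Made-up numbers: 120/1000 conversions for A vs 150/1000 for B.
    z, p = two_proportion_z_test(120, 1000, 150, 1000)
    print(f"z = {z:.2f}, p = {p:.3f}")
```

With those made-up numbers, z comes out at about 1.96 and the p-value right around 0.05. B's lead is borderline, so you'd want more data before declaring a winner. Hashing the user ID keeps each person in the same bucket across sessions, so nobody sees the bot switch personalities mid-experiment.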

In the world of chatbots, continuous testing and feedback integration are key to making sure your chatbot is always learning and improving. Think of it like a video game where the levels get harder as you go. Your chatbot needs to level up by facing new challenges and learning from them. This means regularly checking your chatbot with new tests and seeing what users have to say about it.

By always testing and updating, your chatbot can stay smart and helpful. It's like giving your chatbot a workout to keep it in top shape!
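
One way to feed user feedback back into testing is to collect the messages users rated badly and turn them into new regression test cases. This sketch assumes a hypothetical JSON log format with `user_text`, `bot_text`, and `feedback` fields. Adapt it to whatever your platform actually exports.

```python
import json

def harvest_flagged(log_lines, existing_cases):
    """Return new, de-duplicated user messages from thumbs-down turns."""
    seen = {case.strip().lower() for case in existing_cases}
    new_cases = []
    for line in log_lines:
        record = json.loads(line)  # hypothetical one-JSON-object-per-line log
        if record.get("feedback") != "thumbs_down":
            continue
        text = record["user_text"].strip()
        key = text.lower()
        if key and key not in seen:
            seen.add(key)
            new_cases.append(text)
    return new_cases

logs = [
    '{"user_text": "cancel my sub", "bot_text": "Sorry?", "feedback": "thumbs_down"}',
    '{"user_text": "hello", "bot_text": "Hi!", "feedback": "thumbs_up"}',
    '{"user_text": "Cancel my sub", "bot_text": "Sorry?", "feedback": "thumbs_down"}',
]
print(harvest_flagged(logs, existing_cases=["where is my order"]))  # ['cancel my sub']
```

Someone still has to write the expected answer for each harvested message. Once that's done, the next release gets tested against exactly the chats that went wrong last time.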

When we talk about chatbots, we're really talking about their brains: the Natural Language Processing (NLP) tech that lets them chat with us like they're one of our buddies. Testing NLP accuracy and relevance is like making sure your chatbot's brain is sharp and on point. It's all about checking if the chatbot gets what users are saying and if it can come back with the right answers.

To make sure your chatbot is not just smart but also makes sense, you gotta test how well it understands and answers. Think of it like a pop quiz for your chatbot.

Here's a quick checklist to help you test your chatbot's NLP smarts:

Remember, a chatbot that gets it right is a chatbot that'll keep your users coming back for more chit-chat.
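
A simple NLP accuracy check compares the intent your bot predicted with the intent a human labeled, over a set of test phrases. The sketch below computes overall accuracy plus per-intent precision and recall from (expected, predicted) pairs. The intents and pairs are made up; in practice the predictions would come from running your NLU on each test utterance.

```python
from collections import Counter

def intent_report(pairs):
    """pairs: list of (expected_intent, predicted_intent).
    Returns (accuracy, {intent: (precision, recall)})."""
    correct = sum(expected == predicted for expected, predicted in pairs)
    tp, fp, fn = Counter(), Counter(), Counter()
    for expected, predicted in pairs:
        if expected == predicted:
            tp[expected] += 1
        else:
            fp[predicted] += 1  # predicted this intent, but it was wrong
            fn[expected] += 1   # missed the intent the user actually meant
    report = {}
    for intent in sorted(set(tp) | set(fp) | set(fn)):
        predicted_total = tp[intent] + fp[intent]
        actual_total = tp[intent] + fn[intent]
        precision = tp[intent] / predicted_total if predicted_total else 0.0
        recall = tp[intent] / actual_total if actual_total else 0.0
        report[intent] = (precision, recall)
    return correct / len(pairs), report

pairs = [
    ("greeting", "greeting"),
    ("refund", "refund"),
    ("refund", "order_status"),  # misread: the user wanted a refund
    ("order_status", "order_status"),
    ("order_status", "order_status"),
]
accuracy, per_intent = intent_report(pairs)
print(f"accuracy: {accuracy:.0%}")  # accuracy: 80%
for intent, (precision, recall) in per_intent.items():
    print(f"{intent}: precision {precision:.2f}, recall {recall:.2f}")
```

Per-intent numbers matter because a bot can look fine overall while quietly misreading one intent. Here `refund` has a recall of only 0.5.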

When we talk about stress and load testing for chatbots, we're looking at how well the chatbot can handle a lot of users at once. It's like seeing if your chatbot can stay cool under pressure. We want to make sure that as more people start chatting, the bot doesn't get slow or start making mistakes.

To do this, we run tests that mimic lots of users talking to the bot at the same time. This helps us find any weak spots where the chatbot might break down when it gets busy.

Here's what we focus on:

For example, using a tool like Botium for performance testing, we might start with a short stress test. This first test could be just 1 minute long to see how the chatbot does with a quick burst of activity. The results can show us if there are any errors when the chatbot gets a lot of messages all at once.
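
Here's a rough sketch of the same idea in plain Python with `asyncio`. Many simulated users send messages concurrently, and the script records latency and errors. This is not how Botium does it. `fake_bot` is a hypothetical stand-in that just sleeps, so for a real test you'd replace it with a call to a staging endpoint and ramp up `users` step by step.

```python
import asyncio
import random
import statistics
import time

async def fake_bot(message: str) -> str:
    """Hypothetical bot endpoint; replace with a real API call."""
    await asyncio.sleep(random.uniform(0.01, 0.05))  # simulated processing time
    return f"echo: {message}"

async def simulated_user(bot, user_id, messages, latencies, errors):
    """One user sending messages one after another, like a real chat."""
    for text in messages:
        start = time.perf_counter()
        try:
            await bot(f"user {user_id}: {text}")
            latencies.append(time.perf_counter() - start)
        except Exception as exc:
            errors.append(f"user {user_id}: {type(exc).__name__}")

async def load_test(bot, users=50, messages_per_user=5):
    """Run `users` simulated users concurrently and summarize the results."""
    messages = [f"message {i}" for i in range(messages_per_user)]
    latencies, errors = [], []
    await asyncio.gather(
        *(simulated_user(bot, u, messages, latencies, errors) for u in range(users))
    )
    return {
        "requests": len(latencies) + len(errors),
        "errors": len(errors),
        "p50_s": statistics.median(latencies) if latencies else None,
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile
        "p95_s": statistics.quantiles(latencies, n=20)[-1] if len(latencies) > 1 else None,
    }

if __name__ == "__main__":
    print(asyncio.run(load_test(fake_bot, users=50, messages_per_user=5)))
```

Watching the 95th-percentile latency and the error count as `users` grows tells you where the bot starts to slow down or break.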

When we talk about chatbots, we can't ignore the importance of security and compliance. These bots often handle sensitive data, so it's crucial to make sure they're up to snuff on both fronts. Here's what you need to focus on:

Remember, a breach in security or a slip in compliance can not only harm users but also severely damage a company's reputation and trustworthiness.

By focusing on these areas, you can help ensure that your chatbot is both secure and compliant with relevant laws and regulations.
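
One concrete security check is making sure personal data doesn't end up in chat logs. The sketch below masks e-mail addresses and card-like numbers before storage and scans stored logs for leaks. The regular expressions are illustrative assumptions and will miss plenty of formats. Treat this as a first line of defense, not proof of compliance.

```python
import re

# Illustrative patterns only: real PII detection needs more formats and care.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# 13-16 digits, optionally separated by single spaces or dashes (card-like).
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")

def redact(text: str) -> str:
    """Mask e-mail addresses and card-like numbers before a message is logged."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)

def find_leaks(log_entries):
    """Return stored log entries that still contain unmasked PII."""
    return [entry for entry in log_entries if EMAIL.search(entry) or CARD.search(entry)]

if __name__ == "__main__":
    message = "My card is 4111 1111 1111 1111, email jo@example.com"
    print(redact(message))  # My card is [CARD], email [EMAIL]
```

Running `find_leaks` over a sample of real logs as part of your test suite turns a compliance policy into something that can actually fail a build.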

When we talk about chatbots, it's super important to know if they're doing a good job. Key Performance Indicators (KPIs) are like a scorecard for your chatbot. They tell you how well your chatbot is chatting with people and helping them out. Here are some KPIs that are really important to keep an eye on:

Remember, these KPIs can help you make your chatbot even better. By looking at what's working and what's not, you can tweak your chatbot to be more helpful and fun to talk to.

It's also cool to use tools that can track these KPIs for you. Some tools can show you graphs and numbers about how many people are chatting, what they're talking about, and when they like to chat. This info can help you make smart choices about how to improve your chatbot.
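
If your analytics tool doesn't compute these KPIs for you, they're easy to derive from conversation logs. This sketch assumes a hypothetical `Conversation` record with a completion flag, a fallback count, a human-handoff flag, and an optional 1-5 satisfaction rating. Rename the fields to match your own export.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Conversation:
    """Hypothetical per-conversation log record; rename fields to match your data."""
    completed: bool             # did the user reach their goal?
    fallbacks: int              # times the bot replied "I didn't understand"
    handed_off: bool            # escalated to a human agent
    csat: Optional[int] = None  # 1-5 satisfaction rating, if the user gave one

def kpis(conversations):
    """Compute a few common chatbot KPIs from a list of Conversation records."""
    if not conversations:
        raise ValueError("need at least one conversation")
    n = len(conversations)
    ratings = [c.csat for c in conversations if c.csat is not None]
    return {
        "completion_rate": sum(c.completed for c in conversations) / n,
        # share of conversations with at least one fallback
        "fallback_rate": sum(c.fallbacks > 0 for c in conversations) / n,
        "handoff_rate": sum(c.handed_off for c in conversations) / n,
        "avg_csat": sum(ratings) / len(ratings) if ratings else None,
    }

sample = [
    Conversation(completed=True, fallbacks=0, handed_off=False, csat=5),
    Conversation(completed=True, fallbacks=1, handed_off=False, csat=4),
    Conversation(completed=False, fallbacks=3, handed_off=True, csat=2),
    Conversation(completed=False, fallbacks=0, handed_off=False),
]
print(kpis(sample))
```

Tracking these numbers per release makes it easy to see whether a change actually helped.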

When testing chatbots, it's super important to look at how users talk to the bot and how the bot answers back. This helps us make sure the chatbot is easy to chat with and doesn't confuse folks. We want the chatbot to be like a good buddy, knowing when to talk and when to listen.

Remember, the goal is to have a chatbot that feels like a real person, but without the mistakes. It should be able to handle a bunch of different chats without dropping the ball.

Testing isn't just about finding bugs; it's about making sure the chatbot can handle real-life talks with real people. By checking out the conversations, we can find spots where the chatbot might get mixed up and fix them before it chats with more users.
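
A quick way to find where a chatbot gets mixed up is to look for loops. These are turns where the bot repeats its previous reply word for word, which often means it didn't understand and the user is rephrasing. This sketch assumes transcripts are lists of (user message, bot reply) pairs. It counts which user messages trigger loops most often; the transcripts are made up.

```python
from collections import Counter

def loop_triggers(transcript):
    """Return user messages that made the bot repeat its previous reply."""
    flagged = []
    previous_reply = None
    for user_text, bot_text in transcript:
        if bot_text == previous_reply:
            flagged.append(user_text)
        previous_reply = bot_text
    return flagged

def top_confusions(transcripts, k=3):
    """Most common loop-triggering messages across many transcripts."""
    counts = Counter(msg.lower() for t in transcripts for msg in loop_triggers(t))
    return counts.most_common(k)

transcripts = [
    [("hi", "Hello!"),
     ("change my plan", "Sorry, I didn't get that."),
     ("upgrade plan", "Sorry, I didn't get that.")],
    [("upgrade plan", "Sorry, I didn't get that."),
     ("Upgrade plan", "Sorry, I didn't get that.")],
]
print(top_confusions(transcripts))  # [('upgrade plan', 2)]
```

The messages at the top of that list are good candidates for new training phrases or a new intent.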

Choosing the right frameworks and tools is a game-changer for chatbot testing. Botium.ai stands out as a comprehensive suite of open-source components that chatbot developers can use to ensure their creations are up to the task. With the right tools, you can automate tests, simulate user interactions, and analyze performance effectively.

When selecting a framework or tool, consider these points:

Remember, the goal is to find tools that not only test the chatbot's functionality but also its ability to engage users in a natural and helpful way.

Frameworks like Botium.ai offer a variety of testing capabilities, from simple unit tests to complex end-to-end scenarios. By integrating these tools into your development process, you can catch issues early and maintain a high standard of quality for your chatbot.

In conclusion, the journey to deploying a successful AI chatbot like Galadon involves meticulous planning, strategic testing, and continuous optimization. From choosing the right tools and platforms to conducting a variety of tests such as A/B, automated, and manual, each step is crucial in ensuring that the chatbot meets the desired performance standards and user expectations. As we've explored, testing strategies like unit, integration, and user acceptance testing are essential to refine the chatbot's interactions. Moreover, post-launch monitoring and agile development practices are key to maintaining its effectiveness. Remember, the ultimate goal is to create a seamless user experience that matches or even exceeds what human representatives can offer, providing immediate, high-quality, and cost-effective customer interactions. With the right approach and dedication to best practices, your AI chatbot can become an invaluable asset to your business, driving conversions and fostering customer satisfaction.

Chatbot testing is the process of evaluating the performance and functionality of a chatbot to ensure it responds correctly to user input, handles conversations effectively, and meets business objectives.

Agile development is crucial in chatbot testing as it allows for continuous integration and feedback, enabling iterative improvements and adjustments to the chatbot based on real user interactions.

The main types of chatbot tests include unit testing, integration testing, and user acceptance testing (UAT), each focusing on different aspects of the chatbot's performance and user experience.

Automated testing allows for consistent and efficient evaluation of predefined scenarios, while manual testing is essential for assessing the chatbot's handling of unexpected or nuanced user interactions.

Key metrics in chatbot testing include response accuracy, user satisfaction, conversation completion rates, and the number of fallbacks to human agents.

A/B testing can be used to compare different versions of a chatbot or conversation flows to determine which one performs better in terms of user engagement, conversion rates, and overall satisfaction.